LaminDB


LaminDB is an open-source data framework for biology to query, trace, and validate datasets and models at scale. You get context & memory through a lineage-native lakehouse that understands bio-formats, registries & ontologies.

Why?

(1) Reproducing, tracing & understanding how datasets, models & results are created is critical to quality R&D. Without context, humans & agents make mistakes and cannot close feedback loops across data generation & analysis. Without memory, compute & intelligence are wasted on fragmented, non-compounding tasks.

(2) Training & fine-tuning models with thousands of datasets (across LIMS, ELNs, orthogonal assays) is now a primary path to scaling R&D. But without queryable & validated data, or with data locked in organizational & infrastructure silos, this leads to garbage in, garbage out, or is simply impossible.

Imagine building software without git or pull requests: an agent’s quality would be impossible to verify. While code has git and tables have dbt/warehouses, biological data has lacked a framework for managing its unique complexity.

LaminDB fills the gap.

It is a lineage-native lakehouse that understands bio-registries and formats (AnnData, .zarr, …) based on the established open data stack:

Postgres/SQLite for metadata and cross-platform storage for datasets.

By offering queries, tracing & validation in a single API, LaminDB provides the context & memory to turn messy, agentic biological R&D into a scalable process.

How?

- lineage → track inputs & outputs of notebooks, scripts, functions & pipelines with a single line of code
- lakehouse → manage, monitor & validate schemas for standard and bio formats; query across many datasets
- FAIR datasets → validate & annotate `DataFrame`, `AnnData`, `SpatialData`, `parquet`, `zarr`, …
- LIMS & ELN → programmatic experimental design with bio-registries, ontologies & markdown notes
- unified access → storage locations (local, S3, GCP, …), SQL databases (Postgres, SQLite) & ontologies
- reproducible → auto-track source code & compute environments with data & code versioning
- change management → branching & merging similar to git
- zero lock-in → runs anywhere on open standards (Postgres, SQLite, `parquet`, `zarr`, etc.)
- scalable → you hit storage & database directly through your `pydata` or R stack, no REST API involved
- simple → just `pip install` from PyPI or `install.packages('laminr')` from CRAN
- distributed → zero-copy & lineage-aware data sharing across infrastructure (databases & storage locations)
- integrations → git, nextflow, vitessce, redun, and more
- extensible → create custom plug-ins based on the Django ORM, the basis for LaminDB’s registries

GUI, permissions, audit logs? LaminHub is a collaboration hub built on LaminDB similar to how GitHub is built on git.

Who?

Scientists and engineers at leading research institutions and biotech companies, including:

- Industry → Pfizer, Altos Labs, Ensocell Therapeutics, …
- Academia & Research → scverse, DZNE (National Research Center for Neuro-Degenerative Diseases), Helmholtz Munich (National Research Center for Environmental Health), …
- Research Hospitals → Global Immunological Swarm Learning Network: Harvard, MIT, Stanford, ETH Zürich, Charité, U Bonn, Mount Sinai, …

From personal research projects to pharma-scale deployments managing petabytes of data across:

| entities | orders of magnitude (OOMs) |
|---|---|
| observations & datasets | 10¹² & 10⁶ |
| runs & transforms | 10⁹ & 10⁵ |
| proteins & genes | 10⁹ & 10⁶ |
| biosamples & species | 10⁵ & 10² |
| … | … |

Docs¶

Copy llms.txt into an LLM chat and let AI explain or read the docs.

Quickstart¶

Install the Python package:

```bash
pip install lamindb
```

Query databases¶

You can browse public databases at lamin.ai/explore. To query laminlabs/cellxgene, run:

```python
import lamindb as ln

db = ln.DB("laminlabs/cellxgene")  # a database object for queries
df = db.Artifact.to_dataframe()  # a dataframe listing datasets & models
```

→ connected lamindb: anonymous/test-transfer

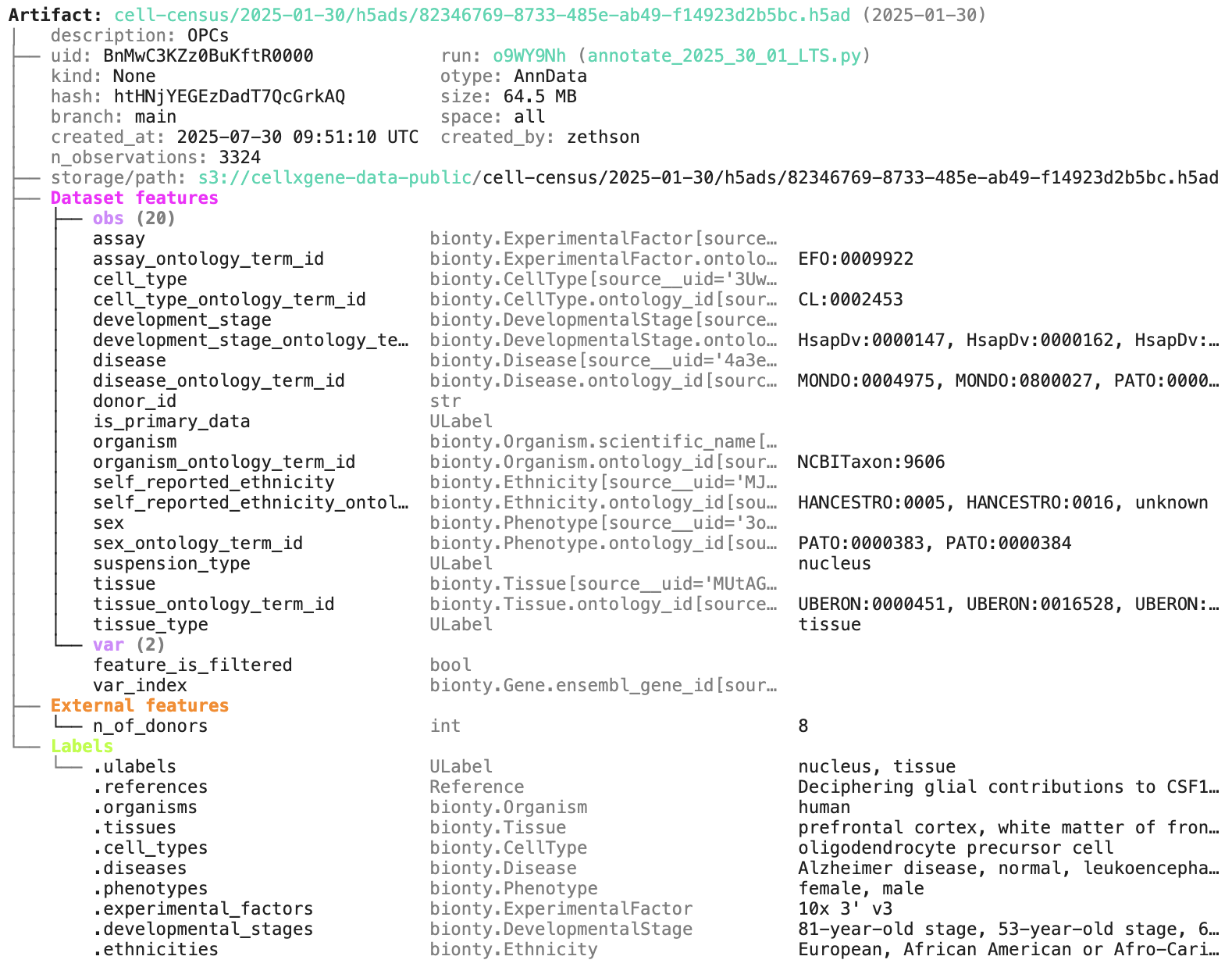

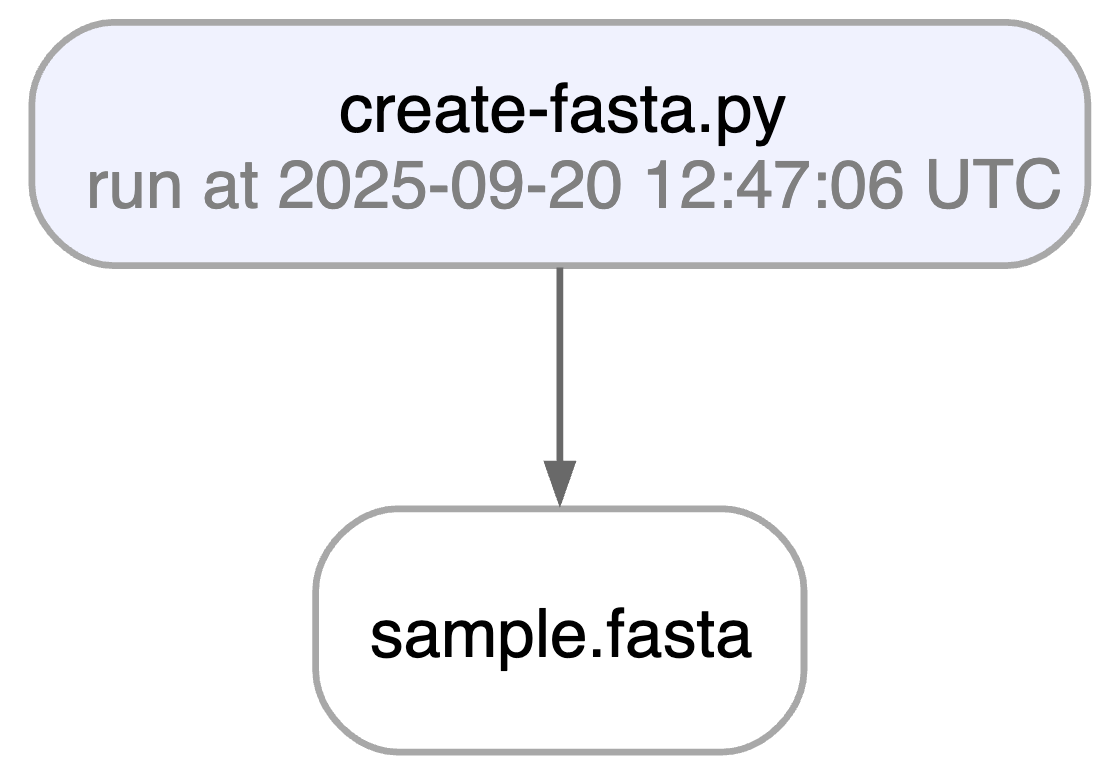

To get a specific dataset, run:

```python
artifact = db.Artifact.get("BnMwC3KZz0BuKftR")  # a metadata object for a dataset
artifact.describe()  # describe the context of the dataset
```

```
Artifact: cell-census/2025-01-30/h5ads/82346769-8733-485e-ab49-f14923d2b5bc.h5ad (2025-01-30) | description: OPCs
├── uid: BnMwC3KZz0BuKftR0000            run: o9WY9Nh (annotate_2025_30_01_LTS.py)
│   kind: None                           otype: AnnData
│   hash: htHNjYEGEzDadT7QcGrkAQ         size: 64.5 MB
│   branch: main                         space: all
│   created_at: 2025-07-30 09:51:10 UTC  created_by: zethson
│   n_observations: 3324
├── storage/path: s3://cellxgene-data-public/cell-census/2025-01-30/h5ads/82346769-8733-485e-ab49-f14923d2b5bc.h5ad
├── Dataset features
│   ├── obs (20)
│   │   assay                          bionty.ExperimentalFactor[source__…
│   │   assay_ontology_term_id         bionty.ExperimentalFactor.ontology…  EFO:0009922
│   │   cell_type                      bionty.CellType[source__uid='3Uw2V…
│   │   cell_type_ontology_term_id     bionty.CellType.ontology_id[source…  CL:0002453
│   │   development_stage              bionty.DevelopmentalStage[source__…
│   │   development_stage_ontology_t…  bionty.DevelopmentalStage.ontology…  HsapDv:0000147, HsapDv:0000162, HsapDv…
│   │   disease                        bionty.Disease[source__uid='4a3ejK…
│   │   disease_ontology_term_id       bionty.Disease.ontology_id[source_…  MONDO:0004975, MONDO:0800027, PATO:000…
│   │   donor_id                       str
│   │   is_primary_data                ULabel
│   │   organism                       bionty.Organism.scientific_name[so…
│   │   organism_ontology_term_id      bionty.Organism.ontology_id[source…  NCBITaxon:9606
│   │   self_reported_ethnicity        bionty.Ethnicity[source__uid='MJRq…
│   │   self_reported_ethnicity_onto…  bionty.Ethnicity.ontology_id[sourc…  HANCESTRO:0005, HANCESTRO:0016, unknown
│   │   sex                            bionty.Phenotype[source__uid='3ox8…
│   │   sex_ontology_term_id           bionty.Phenotype.ontology_id[sourc…  PATO:0000383, PATO:0000384
│   │   suspension_type                ULabel                               nucleus
│   │   tissue                         bionty.Tissue[source__uid='MUtAGdL…
│   │   tissue_ontology_term_id        bionty.Tissue.ontology_id[source__…  UBERON:0000451, UBERON:0016528, UBERON…
│   │   tissue_type                    ULabel                               tissue
│   └── var (2)
│       feature_is_filtered            bool
│       var_index                      bionty.Gene.ensembl_gene_id[source…
├── External features
│   └── n_of_donors                    int                                  8
└── Labels
    └── .ulabels                       ULabel                               nucleus, tissue
        .references                    Reference                            Deciphering glial contributions to CSF…
        .organisms                     bionty.Organism                      human
        .tissues                       bionty.Tissue                        prefrontal cortex, white matter of fro…
        .cell_types                    bionty.CellType                      oligodendrocyte precursor cell
        .diseases                      bionty.Disease                       Alzheimer disease, normal, leukoenceph…
        .phenotypes                    bionty.Phenotype                     female, male
        .experimental_factors          bionty.ExperimentalFactor            10x 3' v3
        .developmental_stages          bionty.DevelopmentalStage            81-year-old stage, 53-year-old stage, …
        .ethnicities                   bionty.Ethnicity                     European, African American or Afro-Car…
```


Access the content of the dataset via:

```python
local_path = artifact.cache()  # return a local path from a cache
adata = artifact.load()  # load object into memory
```

! run input wasn't tracked, call `ln.track()` and re-run

! run input wasn't tracked, call `ln.track()` and re-run
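The two calls differ in what they return: `cache()` gives you a local file path, while `load()` reads the object into memory. To illustrate only the caching idea, here is a toy sketch in which "remote" storage is simply another local directory; `cache` is a hypothetical helper, not LaminDB's implementation, which fetches from storage locations such as S3.

```python
import shutil
import tempfile
from pathlib import Path


def cache(remote_path: Path, cache_dir: Path) -> Path:
    """Hypothetical helper: copy a file into cache_dir once, then reuse the copy."""
    local_path = cache_dir / remote_path.name
    if not local_path.exists():  # cache miss: fetch the file
        cache_dir.mkdir(parents=True, exist_ok=True)
        shutil.copy2(remote_path, local_path)
    return local_path  # cache hit or freshly fetched copy


# Simulate "remote" storage with a temporary directory.
tmp = Path(tempfile.mkdtemp())
remote = tmp / "remote" / "dataset.h5ad"
remote.parent.mkdir()
remote.write_bytes(b"example bytes")

first = cache(remote, tmp / "cache")   # copies the file
second = cache(remote, tmp / "cache")  # returns the existing copy
data = first.read_bytes()              # "loading" here just reads the cached file
```

A real cache also has to notice when the remote file changes; this sketch deliberately ignores that.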

You can query by biological entities like Disease through the bionty plug-in:

```python
alzheimers = db.bionty.Disease.get(name="Alzheimer disease")  # a Disease record
df = db.Artifact.filter(diseases=alzheimers).to_dataframe()  # artifacts linked to it
```
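Because metadata lives in a SQL database (Postgres or SQLite), a filter such as `diseases=alzheimers` amounts to a join through a many-to-many link table. The sketch below shows the shape of such a query with Python's built-in `sqlite3`; the table and column names are hypothetical and are not LaminDB's actual schema.

```python
import sqlite3

# Hypothetical minimal schema: artifacts, diseases, and a link table
# for the many-to-many relation between them.
con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE artifact (id INTEGER PRIMARY KEY, key TEXT);
CREATE TABLE disease (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE artifact_disease (artifact_id INTEGER, disease_id INTEGER);
INSERT INTO artifact VALUES (1, 'opcs.h5ad'), (2, 'liver.h5ad');
INSERT INTO disease VALUES (1, 'Alzheimer disease'), (2, 'normal');
INSERT INTO artifact_disease VALUES (1, 1), (1, 2), (2, 2);
""")

# Analogue of filtering artifacts by a linked disease record.
rows = con.execute(
    """
    SELECT DISTINCT a.key
    FROM artifact a
    JOIN artifact_disease ad ON ad.artifact_id = a.id
    JOIN disease d ON d.id = ad.disease_id
    WHERE d.name = ?
    ORDER BY a.key
    """,
    ("Alzheimer disease",),
).fetchall()
print(rows)  # [('opcs.h5ad',)]
```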

Configure your database¶

You can create a LaminDB instance at lamin.ai and invite collaborators. To connect to a remote instance, run:

```bash
lamin login
lamin connect account/name
```

If you prefer to work with a local SQLite database (no login required), run this instead:

```bash
lamin init --storage ./quickstart-data --modules bionty
```

On the terminal and in a Python session, LaminDB will now auto-connect.

CLI¶

To save a file or folder from the command line, run:

```bash
lamin save myfile.txt --key examples/myfile.txt
```

To sync a file into a local cache (artifacts) or development directory (transforms), run:

```bash
lamin load --key examples/myfile.txt
```

Read more: docs.lamin.ai/cli.

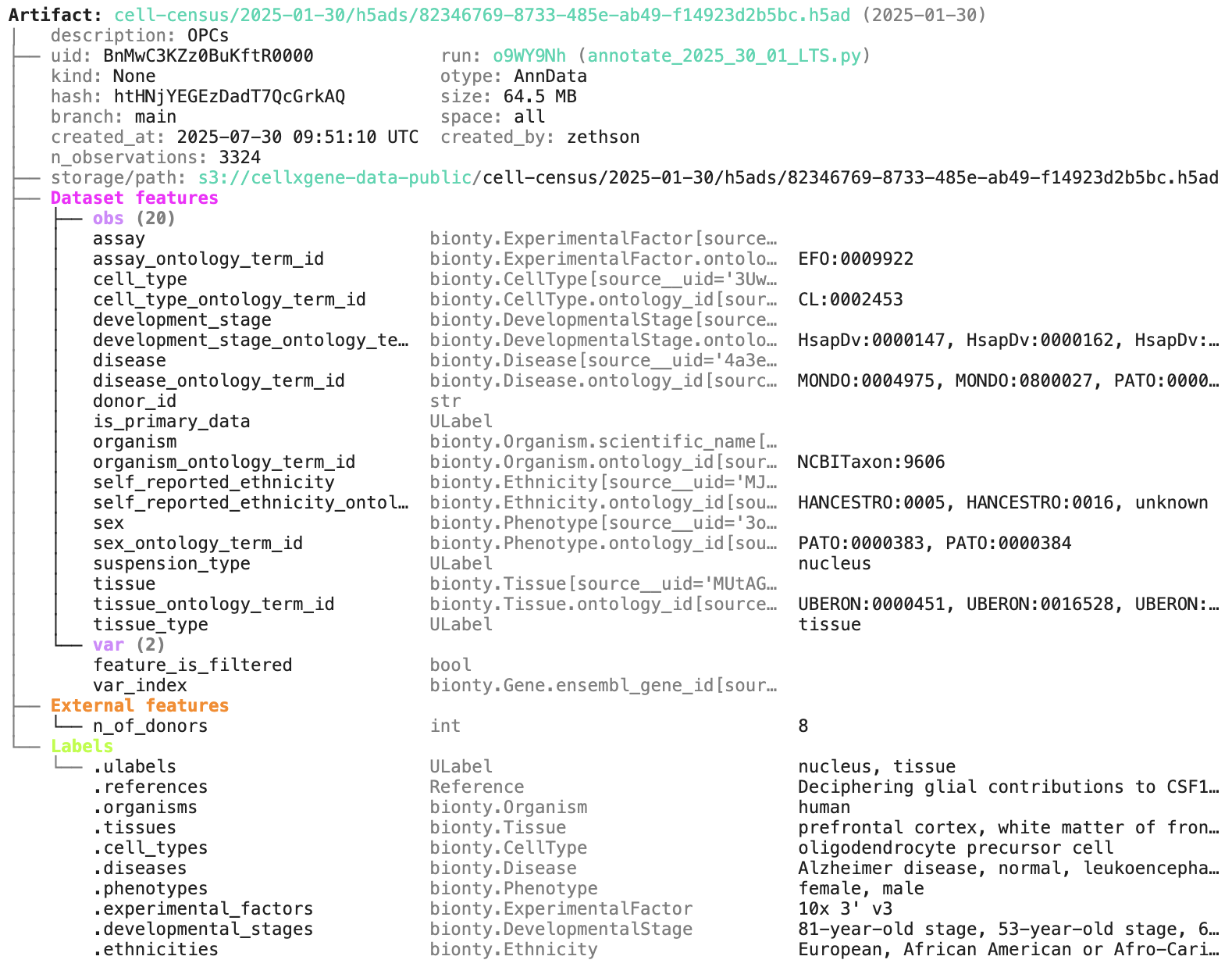

Lineage: scripts & notebooks¶

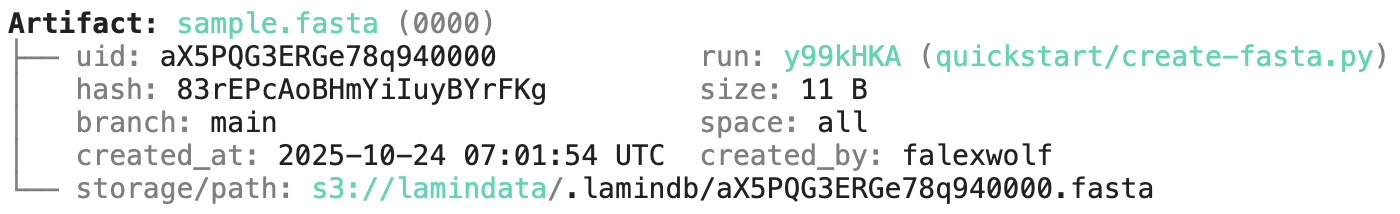

To create a dataset while tracking source code, inputs, outputs, logs, and environment:

```python
import lamindb as ln
# → connected lamindb: account/instance

ln.track()  # track code execution
open("sample.fasta", "w").write(">seq1\nACGT\n")  # create dataset
ln.Artifact("sample.fasta", key="sample.fasta").save()  # save dataset
ln.finish()  # mark run as finished
```

→ created Transform('1OSqopl8UIoj0000', key='README.ipynb'), started new Run('afZptYSbw6c6AO0w') at 2026-02-05 16:19:37 UTC

→ notebook imports: anndata==0.12.2 bionty==2.1.0 lamindb==2.1.1 numpy==2.4.2 pandas==2.3.3

• recommendation: to identify the notebook across renames, pass the uid: ln.track("1OSqopl8UIoj")

! calling anonymously, will miss private instances

! cells [(4, 6), (20, 22)] were not run consecutively

→ finished Run('afZptYSbw6c6AO0w') after 2s at 2026-02-05 16:19:40 UTC

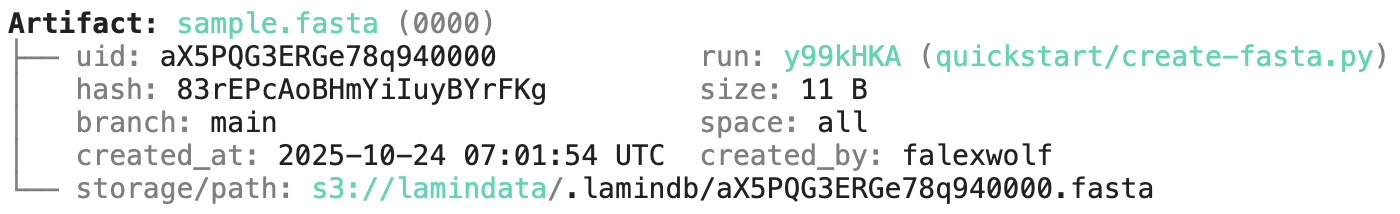

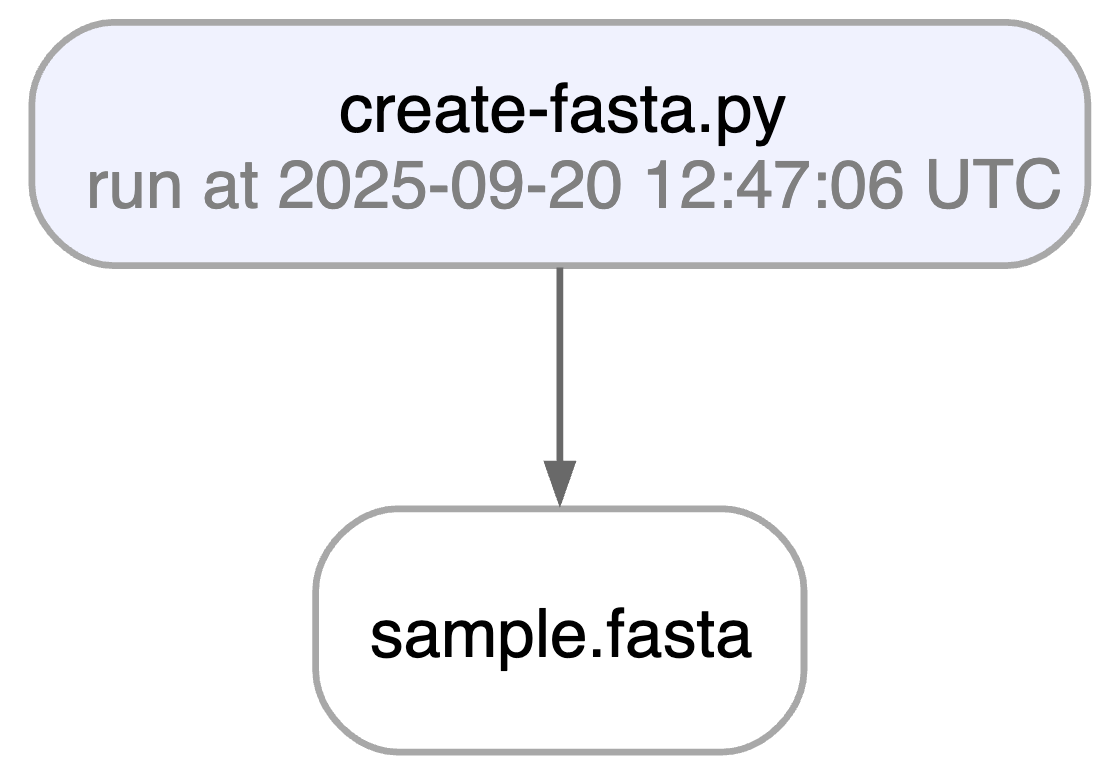

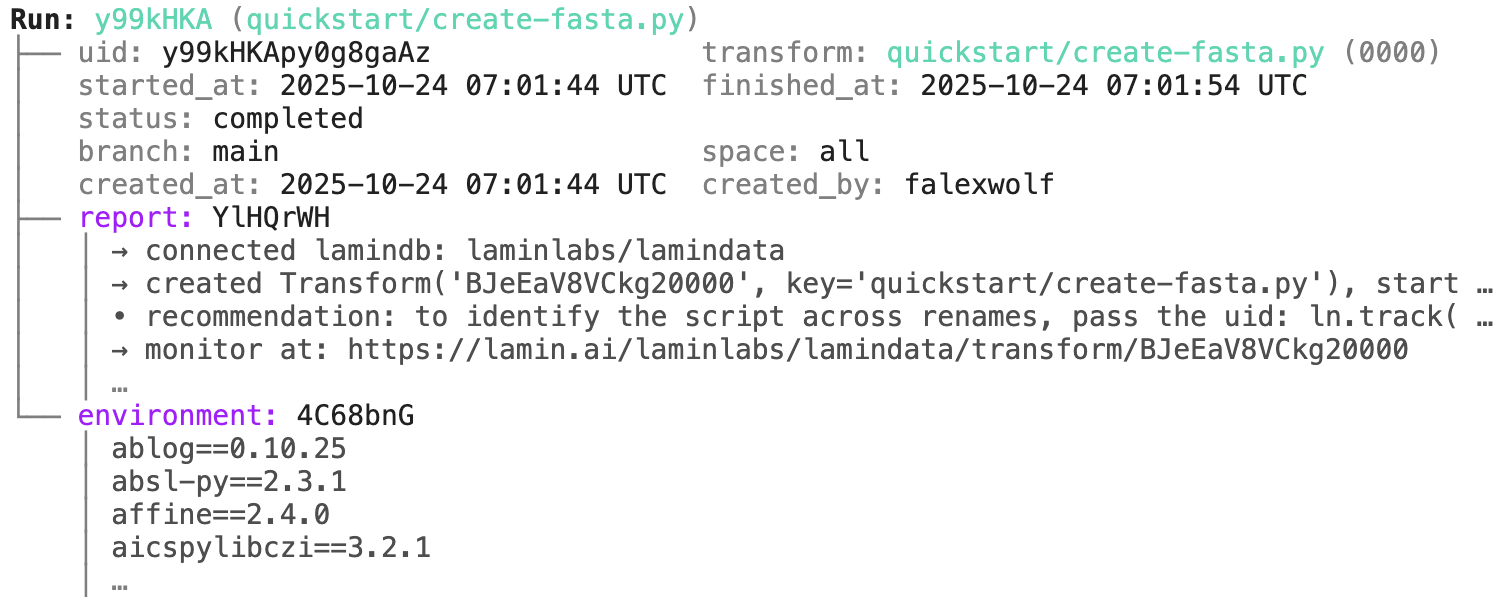

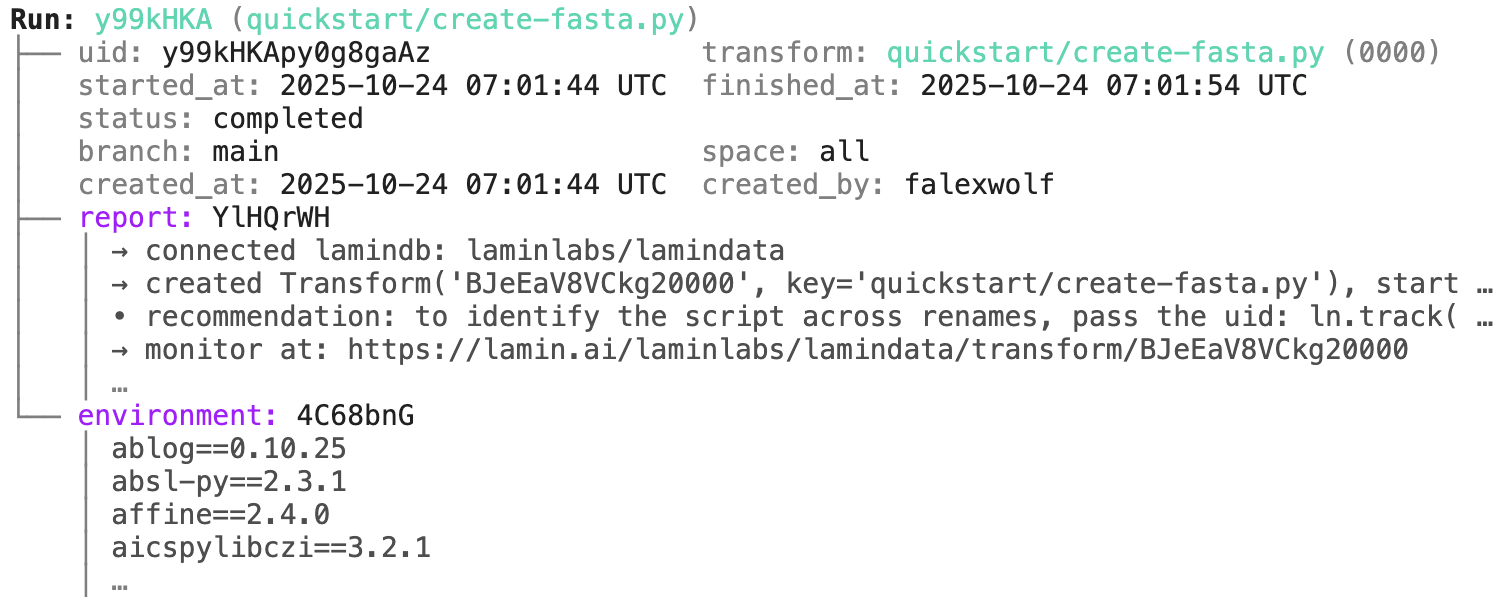

Running this snippet as a script (`python create-fasta.py`) produces the following data lineage, which you can query like so:

```python
artifact = ln.Artifact.get(key="sample.fasta")  # get artifact by key
artifact.describe()  # context of the artifact
artifact.view_lineage()  # fine-grained lineage
```

```
Artifact: sample.fasta (0000)
├── uid: Pugv6wbg8DIZMbfv0000            run: afZptYS (README.ipynb)
│   hash: 83rEPcAoBHmYiIuyBYrFKg         size: 11 B
│   branch: main                         space: all
│   created_at: 2026-02-05 16:19:38 UTC  created_by: anonymous
└── storage/path: /home/runner/work/lamindb/lamindb/docs/test-transfer/.lamindb/Pugv6wbg8DIZMbfv0000.fasta
```

Access the run & transform that created the artifact:

```python
run = artifact.run  # get the run object
transform = artifact.transform  # get the transform object
run.describe()  # context of the run
```

LaminDB is an open-source data framework for biology to query, trace, and validate datasets and models at scale. You get context & memory through a lineage-native lakehouse that understands bio-formats, registries & ontologies.

Why?

(1) Reproducing, tracing & understanding how datasets, models & results are created is critical to quality R&D. Without context, humans & agents make mistakes and cannot close feedback loops across data generation & analysis. Without memory, compute & intelligence are wasted on fragmented, non-compounding tasks.

(2) Training & fine-tuning models with thousands of datasets — across LIMS, ELNs, orthogonal assays — is now a primary path to scaling R&D. But without queryable & validated data or with data locked in organizational & infrastructure siloes, it leads to garbage in, garbage out or is quite simply impossible.

Imagine building software without git or pull requests: an agent's quality would be impossible to verify. While code has git and tables have dbt/warehouses, biological data has lacked a framework for managing its unique complexity.

LaminDB fills the gap.

It is a lineage-native lakehouse that understands bio-registries and formats (AnnData, .zarr, …) based on the established open data stack:

Postgres/SQLite for metadata and cross-platform storage for datasets.

By offering queries, tracing & validation in a single API, LaminDB provides the context & memory to turn messy, agentic biological R&D into a scalable process.

How?

- lineage → track inputs & outputs of notebooks, scripts, functions & pipelines with a single line of code

- lakehouse → manage, monitor & validate schemas for standard and bio formats; query across many datasets

- FAIR datasets → validate & annotate

DataFrame,AnnData,SpatialData,parquet,zarr, … - LIMS & ELN → programmatic experimental design with bio-registries, ontologies & markdown notes

- unified access → storage locations (local, S3, GCP, …), SQL databases (Postgres, SQLite) & ontologies

- reproducible → auto-track source code & compute environments with data & code versioning

- change management → branching & merging similar to git

- zero lock-in → runs anywhere on open standards (Postgres, SQLite,

parquet,zarr, etc.) - scalable → you hit storage & database directly through your

pydataor R stack, no REST API involved - simple → just

pip installfrom PyPI orinstall.packages('laminr')from CRAN - distributed → zero-copy & lineage-aware data sharing across infrastructure (databases & storage locations)

- integrations → git, nextflow, vitessce, redun, and more

- extensible → create custom plug-ins based on the Django ORM, the basis for LaminDB's registries

GUI, permissions, audit logs? LaminHub is a collaboration hub built on LaminDB similar to how GitHub is built on git.

Who?

Scientists and engineers at leading research institutions and biotech companies, including:

- Industry → Pfizer, Altos Labs, Ensocell Therapeutics, ...

- Academia & Research → scverse, DZNE (National Research Center for Neuro-Degenerative Diseases), Helmholtz Munich (National Research Center for Environmental Health), ...

- Research Hospitals → Global Immunological Swarm Learning Network: Harvard, MIT, Stanford, ETH Zürich, Charité, U Bonn, Mount Sinai, ...

From personal research projects to pharma-scale deployments managing petabytes of data across:

| entities | OOMs |

|---|---|

| observations & datasets | 10¹² & 10⁶ |

| runs & transforms | 10⁹ & 10⁵ |

| proteins & genes | 10⁹ & 10⁶ |

| biosamples & species | 10⁵ & 10² |

| ... | ... |

Docs

Copy llms.txt into an LLM chat and let AI explain or read the docs.

Quickstart

Install the Python package:

pip install lamindb

Query databases

You can browse public databases at lamin.ai/explore. To query laminlabs/cellxgene, run:

import lamindb as ln

db = ln.DB("laminlabs/cellxgene") # a database object for queries

df = db.Artifact.to_dataframe() # a dataframe listing datasets & models

To get a specific dataset, run:

artifact = db.Artifact.get("BnMwC3KZz0BuKftR") # a metadata object for a dataset

artifact.describe() # describe the context of the dataset

See the output.

Access the content of the dataset via:

local_path = artifact.cache() # return a local path from a cache

adata = artifact.load() # load object into memory

You can query by biological entities like Disease through plug-in bionty:

alzheimers = db.bionty.Disease.get(name="Alzheimer disease")

df = db.Artifact.filter(diseases=alzheimers).to_dataframe()

Configure your database

You can create a LaminDB instance at lamin.ai and invite collaborators. To connect to a remote instance, run:

lamin login

lamin connect account/name

If you prefer to work with a local SQLite database (no login required), run this instead:

lamin init --storage ./quickstart-data --modules bionty

On the terminal and in a Python session, LaminDB will now auto-connect.

CLI

To save a file or folder from the command line, run:

lamin save myfile.txt --key examples/myfile.txt

To sync a file into a local cache (artifacts) or development directory (transforms), run:

lamin load --key examples/myfile.txt

Read more: docs.lamin.ai/cli.

Lineage: scripts & notebooks

To create a dataset while tracking source code, inputs, outputs, logs, and environment:

import lamindb as ln

# → connected lamindb: account/instance

ln.track() # track code execution

open("sample.fasta", "w").write(">seq1\nACGT\n") # create dataset

ln.Artifact("sample.fasta", key="sample.fasta").save() # save dataset

ln.finish() # mark run as finished

Running this snippet as a script (python create-fasta.py) produces the following data lineage:

artifact = ln.Artifact.get(key="sample.fasta") # get artifact by key

artifact.describe() # context of the artifact

artifact.view_lineage() # fine-grained lineage

Access run & transform.

run = artifact.run # get the run object

transform = artifact.transform # get the transform object

run.describe() # context of the run

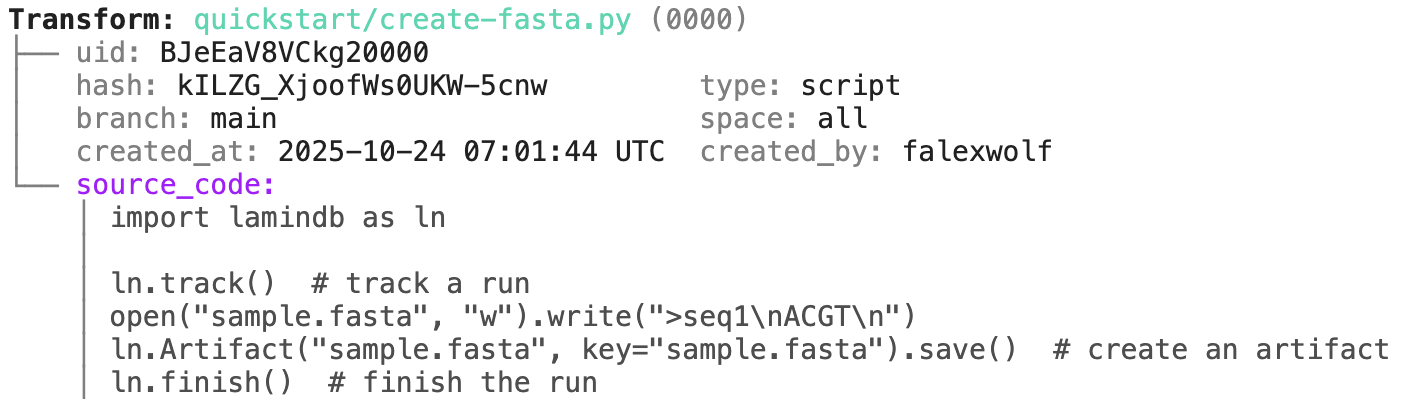

transform.describe() # context of the transform

import lamindb as ln

@ln.flow()

def create_fasta(fasta_file: str = "sample.fasta"):

open(fasta_file, "w").write(">seq1\nACGT\n") # create dataset

ln.Artifact(fasta_file, key=fasta_file).save() # save dataset

if __name__ == "__main__":

pass

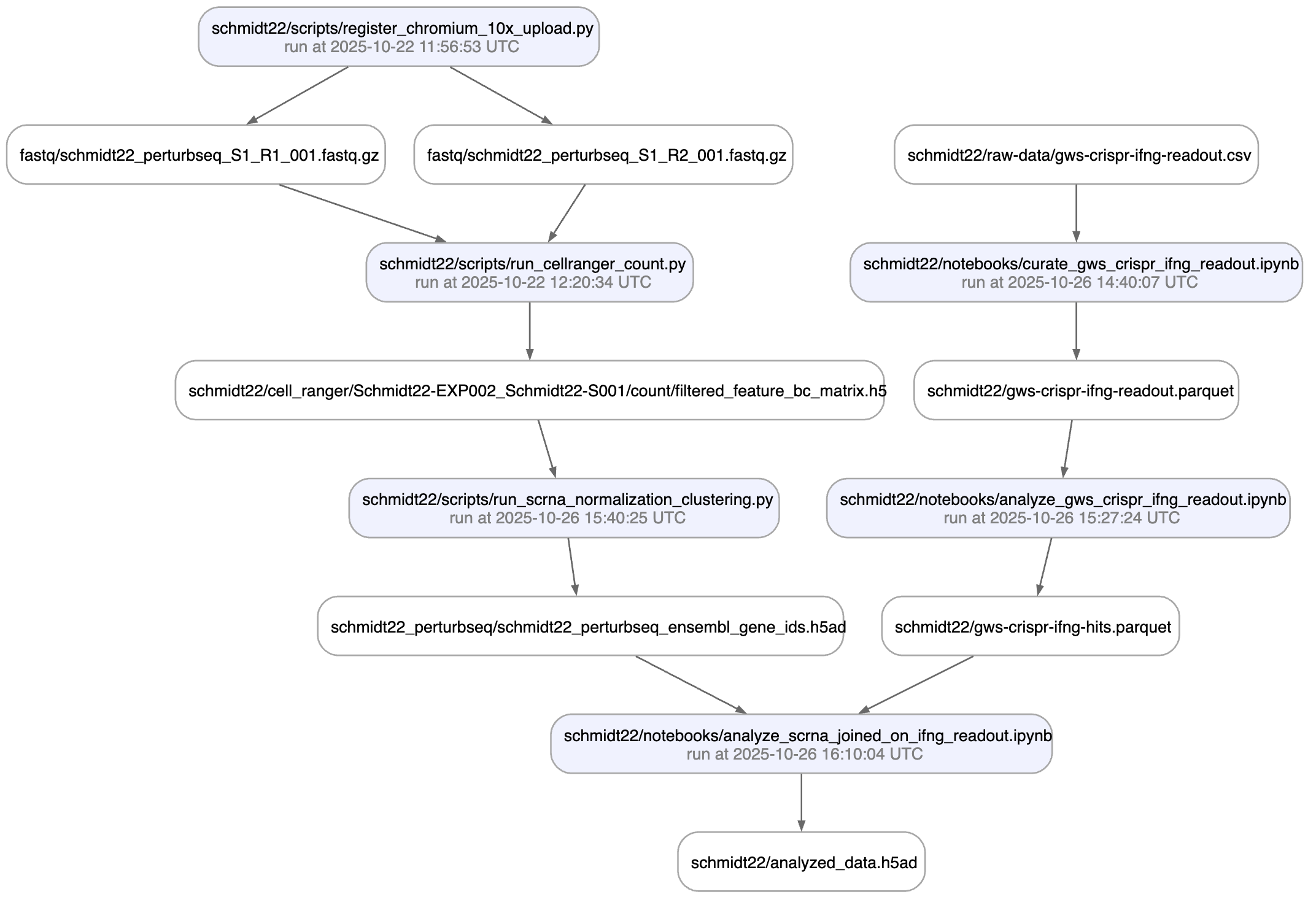

Beyond what you get for scripts & notebooks, this automatically tracks function & CLI params and integrates well with established Python workflow managers: docs.lamin.ai/track. To integrate advanced bioinformatics pipeline managers like Nextflow, see docs.lamin.ai/pipelines.

A richer example.

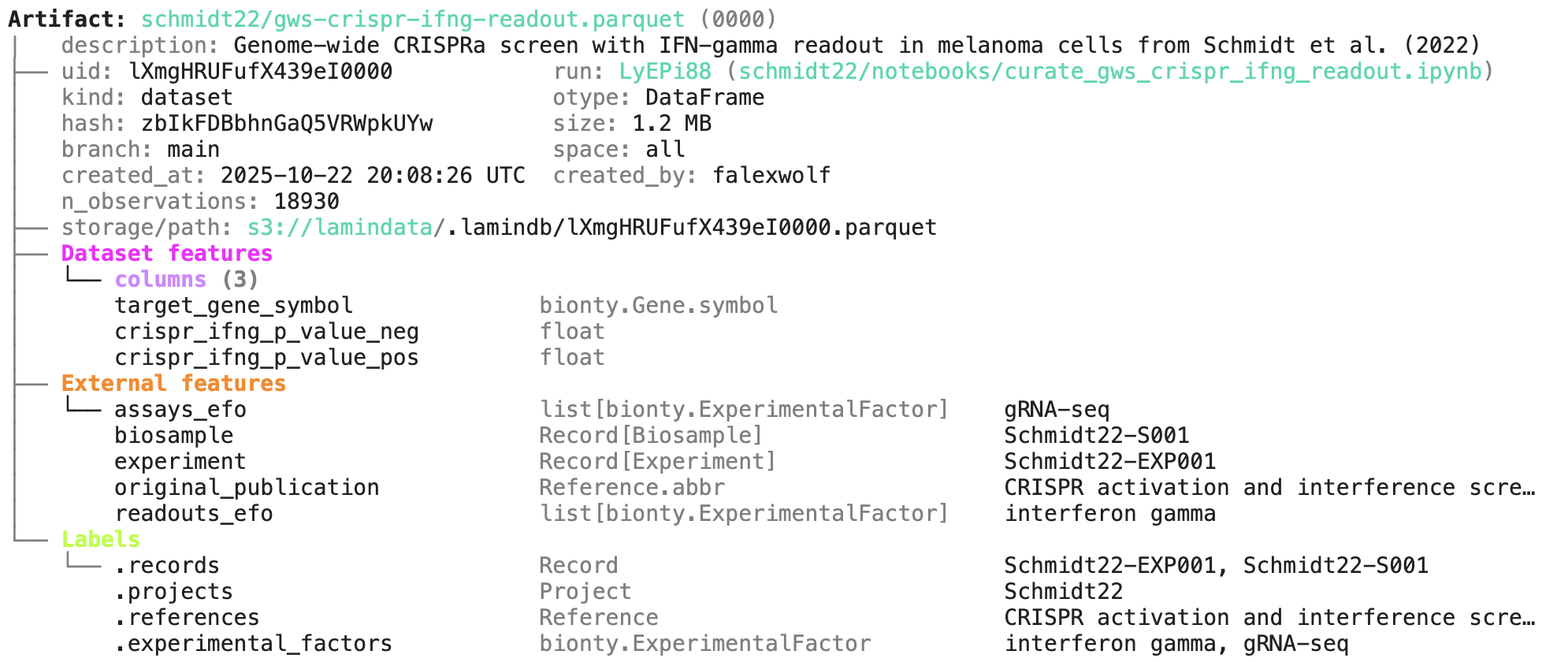

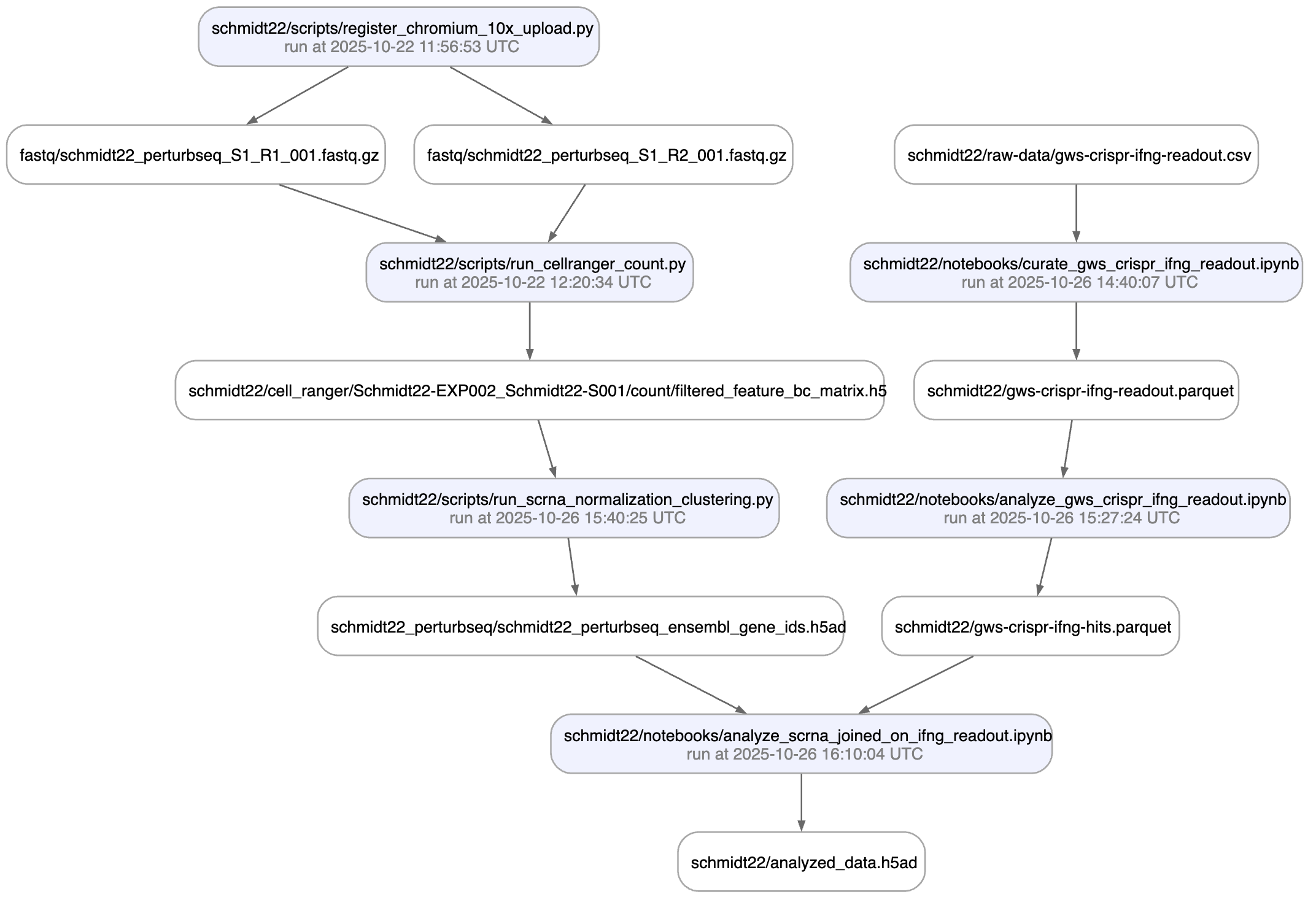

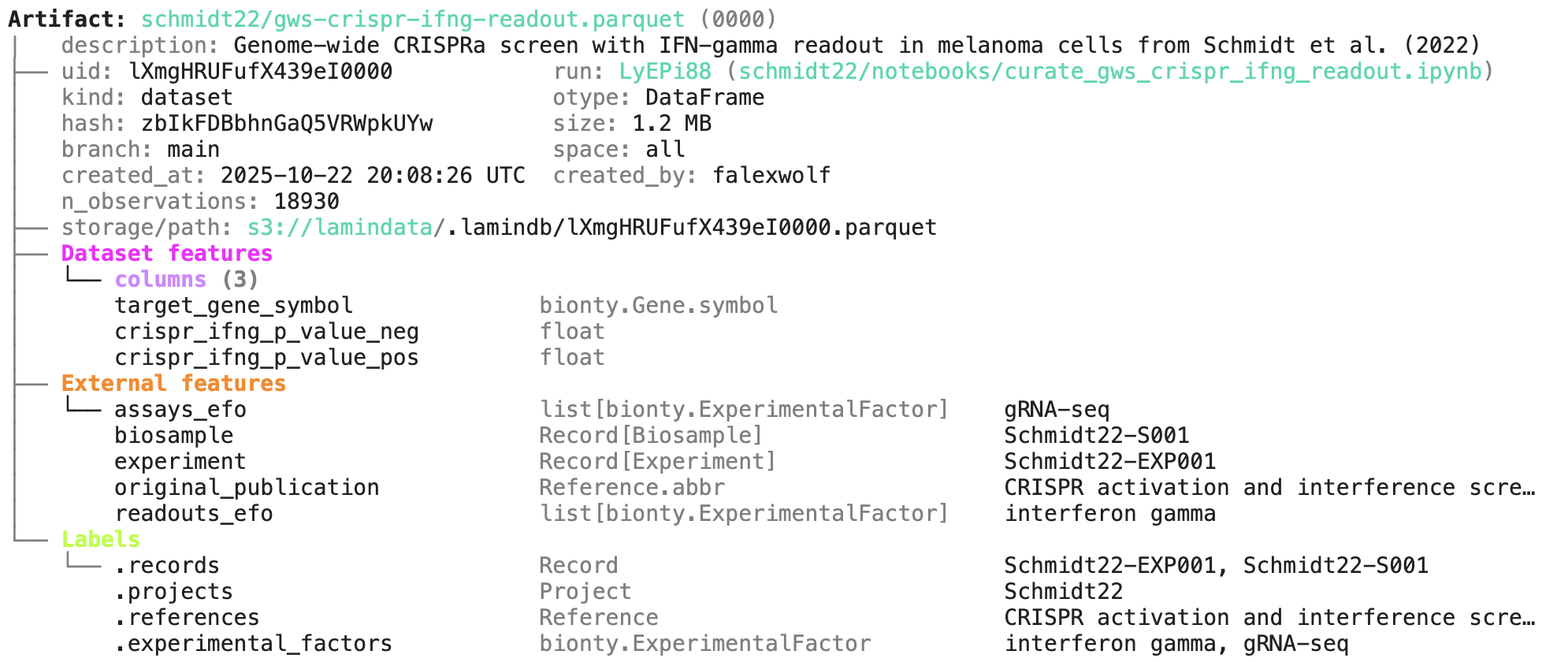

Here is a an automatically generated re-construction of the project of Schmidt el al. (Science, 2022):

A phenotypic CRISPRa screening result is integrated with scRNA-seq data. Here is the result of the screen input:

Labeling & queries by fields

You can label an artifact by running:

my_label = ln.ULabel(name="My label").save() # a universal label

project = ln.Project(name="My project").save() # a project label

artifact.ulabels.add(my_label)

artifact.projects.add(project)

Query for it:

ln.Artifact.filter(ulabels=my_label, projects=project).to_dataframe()

You can also query by the metadata that lamindb automatically collects:

ln.Artifact.filter(run=run).to_dataframe() # by creating run

ln.Artifact.filter(transform=transform).to_dataframe() # by creating transform

ln.Artifact.filter(size__gt=1e6).to_dataframe() # size greater than 1MB

If you want to include more information into the resulting dataframe, pass include.

ln.Artifact.to_dataframe(include=["created_by__name", "storage__root"]) # include fields from related registries

from datetime import date

ln.Feature(name="gc_content", dtype=float).save()

ln.Feature(name="experiment_note", dtype=str).save()

ln.Feature(name="experiment_date", dtype=date, coerce=True).save() # accept date strings

During annotation, feature names and data types are validated against these definitions:

artifact.features.add_values({

"gc_content": 0.55,

"experiment_note": "Looks great",

"experiment_date": "2025-10-24",

})

Query for it:

ln.Artifact.filter(experiment_date="2025-10-24").to_dataframe() # query all artifacts annotated with `experiment_date`

If you want to include the feature values into the dataframe, pass include.

ln.Artifact.to_dataframe(include="features") # include the feature annotations

sample = ln.Record(name="Sample", is_type=True).save() # create entity type: Sample

ln.Record(name="P53mutant1", type=sample).save() # sample 1

ln.Record(name="P53mutant2", type=sample).save() # sample 2

Define features and annotate an artifact with a sample:

ln.Feature(name="design_sample", dtype=sample).save()

artifact.features.add_values({"design_sample": "P53mutant1"})

You can query & search the Record registry in the same way as Artifact or Run.

ln.Record.search("p53").to_dataframe()

import lamindb as ln

ln.track()

open("sample.fasta", "w").write(">seq1\nTGCA\n") # a new sequence

ln.Artifact("sample.fasta", key="sample.fasta", features={"design_sample": "P53mutant1"}).save() # annotate with the new sample

ln.finish()

If you now query by key, you'll get the latest version of this artifact with the latest version of the source code linked with previous versions of artifact and source code are easily queryable:

artifact = ln.Artifact.get(key="sample.fasta") # get artifact by key

artifact.versions.to_dataframe() # see all versions of that artifact

import pandas as pd

df = pd.DataFrame({

"sequence_str": ["ACGT", "TGCA"],

"gc_content": [0.55, 0.54],

"experiment_note": ["Looks great", "Ok"],

"experiment_date": [date(2025, 10, 24), date(2025, 10, 25)],

})

ln.Artifact.from_dataframe(df, key="my_datasets/sequences.parquet").save() # no validation

To validate & annotate the content of the dataframe, use the built-in schema valid_features:

ln.Feature(name="sequence_str", dtype=str).save() # define a remaining feature

artifact = ln.Artifact.from_dataframe(

df,

key="my_datasets/sequences.parquet",

schema="valid_features" # validate columns against features

).save()

artifact.describe()

import anndata as ad

import numpy as np

adata = ad.AnnData(

X=pd.DataFrame([[1]*10]*21).values,

obs=pd.DataFrame({'cell_type_by_model': ['T cell', 'B cell', 'NK cell'] * 7}),

var=pd.DataFrame(index=[f'ENSG{i:011d}' for i in range(10)])

)

artifact = ln.Artifact.from_anndata(

adata,

key="my_datasets/scrna.h5ad",

schema="ensembl_gene_ids_and_valid_features_in_obs"

)

artifact.describe()

To validate a spatialdata or any other array-like dataset, you need to construct a Schema. You can do this by composing simple pandera-style schemas: docs.lamin.ai/curate.

Ontologies

Plugin bionty gives you >20 public ontologies as SQLRecord registries. This was used to validate the ENSG ids in the adata just before.

import bionty as bt

bt.CellType.import_source() # import the default ontology

bt.CellType.to_dataframe() # your extendable cell type ontology in a simple registry

Read more: docs.lamin.ai/manage-ontologies.

Run: afZptYS (README.ipynb) ├── uid: afZptYSbw6c… transform: README.ipynb (0000) │ | description: LaminDB [](https://docs.l… │ started_at: 2026… finished_at: 2026-02-05 16:19:40 UTC │ status: completed │ branch: main space: all │ created_at: 2026… created_by: anonymous └── environment: 3i6mXkn │ aiobotocore==2.26.0 │ aiohappyeyeballs==2.6.1 │ aiohttp==3.13.3 │ aioitertools==0.13.0 │ …

```python
transform.describe()  # context of the transform
```

```
Transform: README.ipynb (0000) | description: LaminDB [](https://docs.lamin.ai) [](https://docs.lamin.ai/llms.txt) [](https://codecov.io/gh/laminlabs/lamindb) [](https://pypi.org/project/lamindb) [](https://cran.r-project.org/package=laminr) [](https://github.com/laminlabs/lamindb) [](https://pepy.tech/project/lamindb)
├── uid: 1OSqopl8UIoj0000                hash: LPEWGk_HPJjXjXe6A0V0wg  type: notebook
│   branch: main                         space: all
│   created_at: 2026-02-05 16:19:37 UTC  created_by: anonymous
└── source_code:
    │ # %% [markdown]
    │ #
    │ #
    │ # LaminDB is an open-source data framework for biology to query, trace, and vali …
    │ # You get context & memory through a lineage-native lakehouse that understands b …
    │ #
    │ # <details>
    │ # <summary>Why?</summary>
    │ #
    │ # (1) Reproducing, tracing & understanding how datasets, models & results are cr …
    │ # Without context, humans & agents make mistakes and cannot close feedback loops …
    │ # Without memory, compute & intelligence are wasted on fragmented, non-compoundi …
    │ #
    │ # (2) Training & fine-tuning models with thousands of datasets — across LIMS, EL …
    │ # But without queryable & validated data or with data locked in organizational & …
    │ #
    │ # Imagine building software without git or pull requests: an agent's quality wou …
    │ # While code has git and tables have dbt/warehouses, biological data has lacked …
    │ #
    │ # LaminDB fills the gap.
    │ # It is a lineage-native lakehouse that understands bio-registries and formats ( …
    │ # Postgres/SQLite for metadata and cross-platform storage for datasets.
    │ # By offering queries, tracing & validation in a single API, LaminDB provides th …
    │ #
    │ # </details>
    │ #
    │ # <img width="800px" src="https://lamin-site-assets.s3.amazonaws.com/.lamindb/Bu …
    │ #
    │ # How?
    │ #
    │ …
```
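Conceptually, these records form a graph: a transform is the code, a run is one execution of it, and each artifact points to the run that created it, which in turn lists the artifacts it read. A minimal sketch of walking that graph upstream, using hypothetical plain dataclasses rather than LaminDB's registries (the file names `raw.fastq`, `sample.bam` and `align.py` are made up):

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Transform:  # the code: a script, notebook, function, or pipeline
    key: str


@dataclass
class Run:  # one execution of a transform, with the artifacts it read
    transform: Transform
    inputs: list[Artifact] = field(default_factory=list)


@dataclass
class Artifact:  # a dataset or model, linked to the run that created it
    key: str
    run: Optional[Run] = None


def upstream(artifact: Artifact) -> list[str]:
    """Walk lineage upstream: artifact <- transform, then recurse into the run's inputs."""
    if artifact.run is None:  # no recorded creator, e.g. an uploaded file
        return []
    steps = [f"{artifact.key} <- {artifact.run.transform.key}"]
    for parent in artifact.run.inputs:
        steps.extend(upstream(parent))
    return steps


raw = Artifact("raw.fastq")
bam = Artifact("sample.bam", run=Run(Transform("align.py"), inputs=[raw]))
fasta = Artifact("sample.fasta", run=Run(Transform("create-fasta.py"), inputs=[bam]))
print(upstream(fasta))
# ['sample.fasta <- create-fasta.py', 'sample.bam <- align.py']
```

Real lineage is a directed acyclic graph in which several runs can share an input; this sketch does not deduplicate shared ancestors.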

Lineage: functions & workflows¶

You can achieve the same traceability for functions & workflows:

import lamindb as ln

@ln.flow()

def create_fasta(fasta_file: str = "sample.fasta"):

open(fasta_file, "w").write(">seq1\nACGT\n") # create dataset

ln.Artifact(fasta_file, key=fasta_file).save() # save dataset

if __name__ == "__main__":

pass

Beyond what you get for scripts & notebooks, this automatically tracks function & CLI params and integrates well with established Python workflow managers: docs.lamin.ai/track. To integrate advanced bioinformatics pipeline managers like Nextflow, see docs.lamin.ai/pipelines.
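Recording function parameters is something a decorator can do by binding each call's arguments to the function's signature. The following is a rough sketch of that idea using only the standard library; `flow_sketch` and `RUNS` are hypothetical names, and this is not how `ln.flow()` is implemented (for instance, it does not capture CLI parameters).

```python
import functools
import inspect

RUNS = []  # hypothetical in-memory log of runs


def flow_sketch(func):
    """Hypothetical decorator: log the function name and its bound parameters per call."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()  # also record defaults, e.g. fasta_file="sample.fasta"
        RUNS.append({"transform": func.__qualname__, "params": dict(bound.arguments)})
        return func(*args, **kwargs)

    return wrapper


@flow_sketch
def create_fasta(fasta_file: str = "sample.fasta") -> str:
    return f"would write {fasta_file}"  # stand-in for the real work


create_fasta()
create_fasta("other.fasta")
print(RUNS)
```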

A richer example.

Here is a an automatically generated re-construction of the project of Schmidt el al. (Science, 2022):

A phenotypic CRISPRa screening result is integrated with scRNA-seq data. Here is the result of the screen input:

Labeling & queries by fields¶

You can label an artifact by running:

```python
my_label = ln.ULabel(name="My label").save()  # a universal label
project = ln.Project(name="My project").save()  # a project label
artifact.ulabels.add(my_label)
artifact.projects.add(project)
```

Query for it:

```python
ln.Artifact.filter(ulabels=my_label, projects=project).to_dataframe()
```

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | version_tag | is_latest | is_locked | created_at | branch_id | space_id | storage_id | run_id | schema_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | Pugv6wbg8DIZMbfv0000 | sample.fasta | None | .fasta | None | None | 11 | 83rEPcAoBHmYiIuyBYrFKg | None | None | None | True | False | 2026-02-05 16:19:38.594000+00:00 | 1 | 1 | 3 | 3 | None | 3 |

You can also query by the metadata that lamindb automatically collects:

```python
ln.Artifact.filter(run=run).to_dataframe()  # by creating run
ln.Artifact.filter(transform=transform).to_dataframe()  # by creating transform
ln.Artifact.filter(size__gt=1e6).to_dataframe()  # size greater than 1MB
```

| uid | id | key | description | suffix | kind | otype | size | hash | n_files | n_observations | version_tag | is_latest | is_locked | created_at | branch_id | space_id | storage_id | run_id | schema_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

If you want to include more information into the resulting dataframe, pass include.

```python
ln.Artifact.to_dataframe(include=["created_by__name", "storage__root"])  # include fields from related registries
```

| id | uid | key | created_by__name | storage__root |
|---|---|---|---|---|
| 2 | Pugv6wbg8DIZMbfv0000 | sample.fasta | None | /home/runner/work/lamindb/lamindb/docs/test-tr... |
| 1 | 9K1dteZ6Qx0EXK8g0000 | example_datasets/mini_immuno/dataset1.h5ad | None | s3://lamindata |

Note: The query syntax for DB objects and for your default database is the same.
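Both `size__gt=1e6` and `created_by__name` use the double-underscore lookup syntax of the Django ORM, on which LaminDB's registries are built: a trailing suffix such as `__gt` picks the comparison, and other `__` separators step into related records. The toy `filter_records` helper below mimics that convention over plain dictionaries. It is a hypothetical sketch with made-up rows that supports only a few operators, whereas real queries are translated to SQL.

```python
import operator

# Hypothetical rows, loosely shaped like artifact records with a related user.
artifacts = [
    {"key": "a.fasta", "size": 11, "created_by": {"name": "alice"}},
    {"key": "b.h5ad", "size": 5_000_000, "created_by": {"name": "bob"}},
]

# A few Django-style lookup suffixes mapped to comparison functions.
OPERATORS = {
    "exact": operator.eq,
    "gt": operator.gt,
    "gte": operator.ge,
    "lt": operator.lt,
    "lte": operator.le,
}


def resolve(record, path):
    """Follow a path such as ["created_by", "name"] through nested dicts."""
    value = record
    for part in path:
        value = value[part]
    return value


def matches(record, lookup, expected):
    """Evaluate one lookup such as size__gt=1e6 or created_by__name="alice"."""
    *path, last = lookup.split("__")
    if last in OPERATORS:
        compare = OPERATORS[last]
    else:  # no operator suffix: the last part is a field name, compare for equality
        path, compare = path + [last], operator.eq
    return compare(resolve(record, path), expected)


def filter_records(records, **lookups):
    """Keep records satisfying all lookups (multiple keyword arguments combine with AND)."""
    return [r for r in records if all(matches(r, k, v) for k, v in lookups.items())]


large = filter_records(artifacts, size__gt=1e6)
by_alice = filter_records(artifacts, created_by__name="alice")
print([r["key"] for r in large], [r["key"] for r in by_alice])  # ['b.h5ad'] ['a.fasta']
```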

Queries by features¶

You can annotate datasets and samples with features. Let’s define some:

```python
from datetime import date

ln.Feature(name="gc_content", dtype=float).save()
ln.Feature(name="experiment_note", dtype=str).save()
ln.Feature(name="experiment_date", dtype=date, coerce=True).save()  # accept date strings
```

Feature(uid='Sbn2FEoKtDWT', is_type=False, name='experiment_date', _dtype_str='date', unit=None, description=None, array_rank=0, array_size=0, array_shape=None, synonyms=None, default_value=None, nullable=True, coerce=True, branch_id=1, space_id=1, created_by_id=3, run_id=None, type_id=None, created_at=2026-02-05 16:19:40 UTC, is_locked=False)

During annotation, feature names and data types are validated against these definitions:

```python
artifact.features.add_values({
    "gc_content": 0.55,
    "experiment_note": "Looks great",
    "experiment_date": "2025-10-24",
})
```

✓ "columns" is validated against Feature.name
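As a rough illustration of this validation step, here is a hypothetical stdlib-only validator: it rejects unknown feature names, checks each value's type, and, for a feature declared with `coerce=True`, converts ISO date strings to `datetime.date`. The names `FEATURES` and `validate_values` are made up; LaminDB's own validation covers more cases, such as categorical features.

```python
from datetime import date

# Hypothetical feature definitions mirroring the ones above: name -> (dtype, coerce)
FEATURES = {
    "gc_content": (float, False),
    "experiment_note": (str, False),
    "experiment_date": (date, True),  # coerce=True: accept ISO date strings
}


def validate_values(values):
    """Check names and types against FEATURES, coercing date strings where allowed."""
    validated = {}
    for name, value in values.items():
        if name not in FEATURES:
            raise KeyError(f"{name!r} is not a defined feature")
        dtype, coerce = FEATURES[name]
        if not isinstance(value, dtype):
            if coerce and dtype is date and isinstance(value, str):
                value = date.fromisoformat(value)  # "2025-10-24" -> date(2025, 10, 24)
            else:
                raise TypeError(f"{name!r} expects {dtype.__name__}, got {type(value).__name__}")
        validated[name] = value
    return validated


checked = validate_values(
    {"gc_content": 0.55, "experiment_note": "Looks great", "experiment_date": "2025-10-24"}
)
print(checked["experiment_date"])  # 2025-10-24
```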

Query for it:

```python
ln.Artifact.filter(experiment_date="2025-10-24").to_dataframe()  # query all artifacts annotated with `experiment_date`
```

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | version_tag | is_latest | is_locked | created_at | branch_id | space_id | storage_id | run_id | schema_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | Pugv6wbg8DIZMbfv0000 | sample.fasta | None | .fasta | None | None | 11 | 83rEPcAoBHmYiIuyBYrFKg | None | None | None | True | False | 2026-02-05 16:19:38.594000+00:00 | 1 | 1 | 3 | 3 | None | 3 |

If you want to include the feature values into the dataframe, pass include.

```python
ln.Artifact.to_dataframe(include="features")  # include the feature annotations
```

→ queried for all categorical features of dtypes Record or ULabel and non-categorical features: (9) ['concentration', 'treatment_time_h', 'sample_note', 'donor', 'perturbation', 'experiment', 'gc_content', 'experiment_note', 'experiment_date']

| id | uid | key | perturbation | experiment | gc_content | experiment_note | experiment_date |
|---|---|---|---|---|---|---|---|
| 2 | Pugv6wbg8DIZMbfv0000 | sample.fasta | NaN | NaN | 0.55 | Looks great | 2025-10-24 |
| 1 | 9K1dteZ6Qx0EXK8g0000 | example_datasets/mini_immuno/dataset1.h5ad | {DMSO, IFNG} | Experiment 1 | NaN | NaN | NaT |
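Because each artifact carries its own subset of annotations, `include="features"` yields one column per feature, with gaps (`NaN`, `NaT`) wherever an artifact lacks a value. The reshaping is a long-to-wide pivot, sketched here in plain Python. The values mirror the output above, but the `(key, feature, value)` row format is a hypothetical layout, not how LaminDB stores or queries feature values.

```python
# Feature annotations as (artifact key, feature name, value) rows.
rows = [
    ("sample.fasta", "gc_content", 0.55),
    ("sample.fasta", "experiment_note", "Looks great"),
    ("example_datasets/mini_immuno/dataset1.h5ad", "experiment", "Experiment 1"),
]


def to_wide(rows, features):
    """Pivot long rows into one dict per artifact; missing annotations become None."""
    table = {}
    for key, feature, value in rows:
        if key not in table:
            table[key] = {name: None for name in features}
        table[key][feature] = value
    return table


wide = to_wide(rows, ["experiment", "gc_content", "experiment_note"])
for key, columns in wide.items():
    print(key, columns)
```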

Lake ♾️ LIMS ♾️ Sheets¶

You can create records for the entities underlying your experiments: samples, perturbations, instruments, etc., for example:

```python
sample = ln.Record(name="Sample", is_type=True).save()  # create entity type: Sample
ln.Record(name="P53mutant1", type=sample).save()  # sample 1
ln.Record(name="P53mutant2", type=sample).save()  # sample 2
```

! you are trying to create a record with name='P53mutant2' but a record with similar name exists: 'P53mutant1'. Did you mean to load it?

Record(uid='Ek7z25icx3veD6sN', is_type=False, name='P53mutant2', description=None, reference=None, reference_type=None, extra_data=None, branch_id=1, space_id=1, created_by_id=3, type_id=4, schema_id=None, run_id=None, created_at=2026-02-05 16:19:41 UTC, is_locked=False)
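LaminDB flagged `P53mutant2` as resembling the existing `P53mutant1`. One common way to implement such a near-duplicate check is fuzzy string matching; the sketch below uses the standard library's `difflib` with an arbitrary similarity cutoff of 0.8. `similar_names` is a hypothetical helper, and LaminDB's own matching logic may differ.

```python
import difflib


def similar_names(name, existing_names, cutoff=0.8):
    """Hypothetical check: return existing names that closely resemble `name`."""
    candidates = difflib.get_close_matches(name, existing_names, n=3, cutoff=cutoff)
    return [c for c in candidates if c != name]  # an exact match is a duplicate, not a near-miss


existing = ["P53mutant1"]
hits = similar_names("P53mutant2", existing)
if hits:
    print(f"! a record with similar name exists: {hits[0]!r}. Did you mean to load it?")
```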

Define features and annotate an artifact with a sample:

```python
ln.Feature(name="design_sample", dtype=sample).save()
artifact.features.add_values({"design_sample": "P53mutant1"})
```

✓ "columns" is validated against Feature.name

✓ "design_sample" is validated against Record.name

You can query & search the Record registry in the same way as Artifact or Run.

```python
ln.Record.search("p53").to_dataframe()
```

| id | uid | name | description | reference | reference_type | extra_data | is_locked | is_type | created_at | branch_id | space_id | created_by_id | type_id | schema_id | run_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 5 | CLdaBcPQlgHxF99g | P53mutant1 | None | None | None | None | False | False | 2026-02-05 16:19:41.047000+00:00 | 1 | 1 | 3 | 4 | None | None |
| 6 | Ek7z25icx3veD6sN | P53mutant2 | None | None | None | None | False | False | 2026-02-05 16:19:41.060000+00:00 | 1 | 1 | 3 | 4 | None | None |

You can also create relationships of entities and edit them like Excel sheets in a GUI via LaminHub.

Data versioning¶

If you change source code or datasets, LaminDB manages versioning for you.

Assume you run a new version of our create-fasta.py script to create a new version of sample.fasta.

```python
import lamindb as ln

ln.track()
open("sample.fasta", "w").write(">seq1\nTGCA\n")  # a new sequence
ln.Artifact("sample.fasta", key="sample.fasta", features={"design_sample": "P53mutant1"}).save()  # annotate with the new sample
ln.finish()
```

→ found notebook README.ipynb, making new version -- anticipating changes

→ created Transform('1OSqopl8UIoj0001', key='README.ipynb'), started new Run('lSRcFsIyClUCKSw7') at 2026-02-05 16:19:41 UTC

→ notebook imports: anndata==0.12.2 bionty==2.1.0 lamindb==2.1.1 numpy==2.4.2 pandas==2.3.3

• recommendation: to identify the notebook across renames, pass the uid: ln.track("1OSqopl8UIoj")

→ creating new artifact version for key 'sample.fasta' in storage '/home/runner/work/lamindb/lamindb/docs/test-transfer'

! cells [(4, 6), (20, 22)] were not run consecutively

→ returning artifact with same hash: Artifact(uid='wBn4PiuTCjmyhh4A0000', version_tag=None, is_latest=True, key=None, description='Report of run afZptYSbw6c6AO0w', suffix='.html', kind='__lamindb_run__', otype=None, size=336730, hash='YHgXH7f1m8yVE0ETuqAPjA', n_files=None, n_observations=None, branch_id=1, space_id=1, storage_id=3, run_id=None, schema_id=None, created_by_id=3, created_at=2026-02-05 16:19:40 UTC, is_locked=False); to track this artifact as an input, use: ln.Artifact.get()

! run was not set on Artifact(uid='wBn4PiuTCjmyhh4A0000', version_tag=None, is_latest=True, key=None, description='Report of run afZptYSbw6c6AO0w', suffix='.html', kind='__lamindb_run__', otype=None, size=336730, hash='YHgXH7f1m8yVE0ETuqAPjA', n_files=None, n_observations=None, branch_id=1, space_id=1, storage_id=3, run_id=None, schema_id=None, created_by_id=3, created_at=2026-02-05 16:19:40 UTC, is_locked=False), setting to current run

! updated description from Report of run afZptYSbw6c6AO0w to Report of run lSRcFsIyClUCKSw7

! returning transform with same hash & key: Transform(uid='1OSqopl8UIoj0000', version_tag=None, is_latest=False, key='README.ipynb', description='LaminDB [](https://docs.lamin.ai) [](https://docs.lamin.ai/llms.txt) [](https://codecov.io/gh/laminlabs/lamindb) [](https://pypi.org/project/lamindb) [](https://cran.r-project.org/package=laminr) [](https://github.com/laminlabs/lamindb) [](https://pepy.tech/project/lamindb)', kind='notebook', hash='LPEWGk_HPJjXjXe6A0V0wg', reference=None, reference_type=None, environment=None, branch_id=1, space_id=1, created_by_id=3, created_at=2026-02-05 16:19:37 UTC, is_locked=False)

• new latest Transform version is: 1OSqopl8UIoj0000

→ finished Run('lSRcFsIyClUCKSw7') after 1s at 2026-02-05 16:19:42 UTC
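The log above shows two mechanisms. New content under an existing key yields a new version ("creating new artifact version for key 'sample.fasta'"), and content whose hash already exists returns the existing record ("returning artifact with same hash"). The toy registry below is a minimal sketch of that logic, not LaminDB's implementation. It assumes an MD5 content hash rendered as 22 characters of URL-safe base64 (the hashes printed above have that shape, but the exact scheme is an assumption) and a 16-character uid stem plus a 4-character version counter, written here as zero-padded decimal. `ToyRegistry`, `content_hash`, and `save` are hypothetical names.

```python
import base64
import hashlib
import secrets
import string

BASE62 = string.digits + string.ascii_letters


def content_hash(data: bytes) -> str:
    """URL-safe base64 of an MD5 digest, trimmed to 22 chars (assumed scheme)."""
    digest = hashlib.md5(data).digest()  # 16 bytes -> 24 base64 chars incl. "=="
    return base64.urlsafe_b64encode(digest).decode()[:22]  # drop the padding


class ToyRegistry:
    """Hypothetical in-memory stand-in for a versioned artifact registry."""

    def __init__(self) -> None:
        self.records: list = []

    def save(self, key: str, data: bytes) -> dict:
        h = content_hash(data)
        # 1) identical content: return the existing record instead of duplicating it
        for rec in self.records:
            if rec["hash"] == h:
                return rec
        # 2) same key, new content: create a new version sharing the uid stem
        previous = [r for r in self.records if r["key"] == key]
        if previous:
            stem = previous[0]["uid"][:16]
            for r in previous:
                r["is_latest"] = False  # only the newest version is flagged latest
            version = len(previous)
        else:
            stem = "".join(secrets.choice(BASE62) for _ in range(16))
            version = 0
        rec = {"uid": f"{stem}{version:04d}", "key": key, "hash": h, "is_latest": True}
        self.records.append(rec)
        return rec


# hypothetical usage: two different contents under one key, then a repeat save
reg = ToyRegistry()
first = reg.save("sample.fasta", b">seq1\nACGT\n")
second = reg.save("sample.fasta", b">seq1\nTGCA\n")
again = reg.save("sample.fasta", b">seq1\nTGCA\n")
```

Keying deduplication on the content hash rather than the key is what lets a repeated save return the old record even when nothing else changed.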

If you now query by key, you get the latest version of this artifact, linked to the latest version of the source code. Previous versions of both the artifact and the source code remain easily queryable:

artifact = ln.Artifact.get(key="sample.fasta")  # get artifact by key
artifact.versions.to_dataframe()  # see all versions of that artifact

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | version_tag | is_latest | is_locked | created_at | branch_id | space_id | storage_id | run_id | schema_id | created_by_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 5 | Pugv6wbg8DIZMbfv0001 | sample.fasta | None | .fasta | None | None | 11 | aqvq4CskQu3Nnr3hl5r3ug | None | None | None | True | False | 2026-02-05 16:19:41.942000+00:00 | 1 | 1 | 3 | 4 | None | 3 |
| 2 | Pugv6wbg8DIZMbfv0000 | sample.fasta | None | .fasta | None | None | 11 | 83rEPcAoBHmYiIuyBYrFKg | None | None | None | False | False | 2026-02-05 16:19:38.594000+00:00 | 1 | 1 | 3 | 3 | None | 3 |
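In the table above, both versions share the first 16 characters of their uid and differ only in the last four (the transform uids in the log follow the same pattern with a 12-character stem). Assuming that convention, which is inferred from the uids shown here, a version history can be recovered by grouping on the uid stem; this is conceptually what `artifact.versions` exposes. The helpers `versions_of` and `latest` are hypothetical and operate on plain dicts, not LaminDB records.

```python
# uids and flags copied from the version table above
records = [
    {"id": 5, "uid": "Pugv6wbg8DIZMbfv0001", "key": "sample.fasta", "is_latest": True},
    {"id": 2, "uid": "Pugv6wbg8DIZMbfv0000", "key": "sample.fasta", "is_latest": False},
]


def versions_of(uid: str, rows: list, stem_len: int = 16) -> list:
    """Return all rows sharing uid's stem, oldest version first (hypothetical helper)."""
    stem = uid[:stem_len]
    family = [r for r in rows if r["uid"][:stem_len] == stem]
    # assumes zero-padded suffixes that sort lexicographically in version order
    return sorted(family, key=lambda r: r["uid"][stem_len:])


def latest(rows: list) -> dict:
    """Pick the single row flagged as latest; raises if there is not exactly one."""
    (row,) = [r for r in rows if r["is_latest"]]
    return row


history = versions_of("Pugv6wbg8DIZMbfv0000", records)
print([r["uid"][-4:] for r in history])  # ['0000', '0001']
print(latest(history)["id"])  # 5
```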

Lakehouse ♾️ feature store

Here is how you ingest a DataFrame:

from datetime import date

import pandas as pd

df = pd.DataFrame({
    "sequence_str": ["ACGT", "TGCA"],
    "gc_content": [0.55, 0.54],
    "experiment_note": ["Looks great", "Ok"],
    "experiment_date": [date(2025, 10, 24), date(2025, 10, 25)],
})
ln.Artifact.from_dataframe(df, key="my_datasets/sequences.parquet").save()  # no validation

→ writing the in-memory object into cache

Artifact(uid='gDZKqz5Z0cqewW2x0000', version_tag=None, is_latest=True, key='my_datasets/sequences.parquet', description=None, suffix='.parquet', kind='dataset', otype='DataFrame', size=3405, hash='XHWWD_cePb1MV2pgSS0Ecg', n_files=None, n_observations=2, branch_id=1, space_id=1, storage_id=3, run_id=None, schema_id=None, created_by_id=3, created_at=2026-02-05 16:19:42 UTC, is_locked=False)

To validate & annotate the content of the dataframe, use the built-in schema valid_features:

ln.Feature(name="sequence_str", dtype=str).save()  # register the one column not yet defined as a feature
artifact = ln.Artifact.from_dataframe(
    df,
    key="my_datasets/sequences.parquet",
    schema="valid_features",  # validate columns against features
).save()
artifact.describe()

! you are trying to create a record with name='valid_features' but a record with similar name exists: 'anndata_ensembl_gene_ids_and_valid_features_in_obs'. Did you mean to load it?

→ writing the in-memory object into cache

→ returning artifact with same hash: Artifact(uid='gDZKqz5Z0cqewW2x0000', version_tag=None, is_latest=True, key='my_datasets/sequences.parquet', description=None, suffix='.parquet', kind='dataset', otype='DataFrame', size=3405, hash='XHWWD_cePb1MV2pgSS0Ecg', n_files=None, n_observations=2, branch_id=1, space_id=1, storage_id=3, run_id=None, schema_id=None, created_by_id=3, created_at=2026-02-05 16:19:42 UTC, is_locked=False); to track this artifact as an input, use: ln.Artifact.get()

→ loading artifact into memory for validation

✓ "columns" is validated against Feature.name

Artifact: my_datasets/sequences.parquet (0000)
├── uid: gDZKqz5Z0cqewW2x0000            run:
│   kind: dataset                        otype: DataFrame
│   hash: XHWWD_cePb1MV2pgSS0Ecg         size: 3.3 KB
│   branch: main                         space: all
│   created_at: 2026-02-05 16:19:42 UTC  created_by: anonymous
│   n_observations: 2
├── storage/path: /home/runner/work/lamindb/lamindb/docs/test-transfer/.lamindb/gDZKqz5Z0cqewW2x0000.parquet
└── Dataset features
    └── columns (4)
        experiment_date    date
        experiment_note    str
        gc_content         float
        sequence_str       str
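Roughly speaking, validating with `valid_features` requires every column to match a registered feature, which is why `sequence_str` had to be defined first; the sketch below assumes dtypes are compared as well. It models that check in plain Python with a hypothetical `FEATURES` registry and `validate_columns` helper. It is not LaminDB's curator; the dtype names simply mirror those printed by `describe()`.

```python
from datetime import date

# hypothetical feature registry: name -> dtype name, mirroring the describe() output above
FEATURES = {
    "experiment_date": "date",
    "experiment_note": "str",
    "gc_content": "float",
    "sequence_str": "str",
}

TYPE_NAMES = {date: "date", str: "str", float: "float", int: "int"}


def infer_dtype(values: list) -> str:
    """Infer a dtype name from the Python types of a column's values."""
    kinds = {TYPE_NAMES.get(type(v), type(v).__name__) for v in values}
    return kinds.pop() if len(kinds) == 1 else "mixed"


def validate_columns(table: dict, features: dict) -> list:
    """Return a list of problems; an empty list means every column validated."""
    problems = []
    for name, values in table.items():
        if name not in features:
            problems.append(f"{name}: not a registered feature")
        elif infer_dtype(values) != features[name]:
            problems.append(f"{name}: expected {features[name]}, got {infer_dtype(values)}")
    return problems


# the same columns as the DataFrame above, as plain lists
table = {
    "sequence_str": ["ACGT", "TGCA"],
    "gc_content": [0.55, 0.54],
    "experiment_note": ["Looks great", "Ok"],
    "experiment_date": [date(2025, 10, 24), date(2025, 10, 25)],
}
print(validate_columns(table, FEATURES))  # []
unregistered = {k: v for k, v in FEATURES.items() if k != "sequence_str"}
print(validate_columns(table, unregistered))  # ['sequence_str: not a registered feature']
```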

You can then filter datasets by schema and launch distributed queries or batch loading across them.
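The filter-then-load pattern can be sketched without LaminDB: select dataset records by schema, then stream their rows in fixed-size batches across dataset boundaries. Everything here (`catalog`, its `schema` and `rows` fields, `iter_batches`, the second dataset) is hypothetical; in LaminDB the records would come from a registry query and the rows from the stored files.

```python
from itertools import islice
from typing import Iterator

# hypothetical catalog of dataset records with their (in-memory) rows
catalog = [
    {"key": "my_datasets/sequences.parquet", "schema": "valid_features",
     "rows": [("ACGT", 0.55), ("TGCA", 0.54)]},
    {"key": "other/run1.parquet", "schema": "valid_features", "rows": [("GGCC", 1.0)]},
    {"key": "my_datasets/scrna.h5ad", "schema": "ensembl_gene_ids_and_valid_features_in_obs",
     "rows": []},
]


def iter_batches(records: list, batch_size: int) -> Iterator[list]:
    """Chain rows across all selected datasets and yield lists of at most batch_size rows."""
    rows = (row for rec in records for row in rec["rows"])
    while batch := list(islice(rows, batch_size)):
        yield batch


selected = [r for r in catalog if r["schema"] == "valid_features"]
for batch in iter_batches(selected, batch_size=2):
    print(batch)
# [('ACGT', 0.55), ('TGCA', 0.54)]
# [('GGCC', 1.0)]
```

Using a generator keeps memory bounded by one batch, which is the point of batch loading when the selected datasets are large.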

Lakehouse beyond tables

To validate an AnnData object against the built-in schema ensembl_gene_ids_and_valid_features_in_obs, call:

import anndata as ad
import numpy as np
import pandas as pd

adata = ad.AnnData(
    X=np.ones((21, 10), dtype=int),
    obs=pd.DataFrame({"cell_type_by_model": ["T cell", "B cell", "NK cell"] * 7}),
    var=pd.DataFrame(index=[f"ENSG{i:011d}" for i in range(10)]),
)
artifact = ln.Artifact.from_anndata(
    adata,
    key="my_datasets/scrna.h5ad",
    schema="ensembl_gene_ids_and_valid_features_in_obs",
)  # not saved here; call .save() to persist it
artifact.describe()

→ writing the in-memory object into cache

→ loading artifact into memory for validation


Artifact: my_datasets/scrna.h5ad (0000)
├── uid: ieJvuTvf1hrvtek60000            run:
│   kind: dataset                        otype: AnnData
│   hash: Sgbj2aSf8AKFs12oJKUxXQ         size: 20.9 KB
│   branch: main                         space: all
│   created_at: <django.db.models.expressions.DatabaseDefault object at 0x7f606dd18250>  created_by: anonymous
│   n_observations: 21
└── storage/path: /home/runner/work/lamindb/lamindb/docs/test-transfer/.lamindb/ieJvuTvf1hrvtek60000.h5ad
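The schema name states what gets checked: the `var` index must hold Ensembl gene IDs, and the `obs` columns must be valid features. The sketch below covers only the first half, and only its format: it assumes human Ensembl gene IDs look like `ENSG` followed by 11 digits, which matches the IDs generated above. Real validation looks each ID up in a bionty registry, which a regex cannot replace; the optional `known` set stands in for that lookup. `invalid_gene_ids` is a hypothetical helper.

```python
import re
from typing import Optional

ENSEMBL_HUMAN_GENE = re.compile(r"ENSG\d{11}")  # assumed format: ENSG + 11 digits


def invalid_gene_ids(ids: list, known: Optional[set] = None) -> list:
    """Return IDs that are malformed or, if a reference set is given, not in it."""
    bad = []
    for gene_id in ids:
        if not ENSEMBL_HUMAN_GENE.fullmatch(gene_id):
            bad.append(gene_id)  # fails the format check
        elif known is not None and gene_id not in known:
            bad.append(gene_id)  # well-formed but unknown to the reference
    return bad


var_index = [f"ENSG{i:011d}" for i in range(10)]  # same IDs as in adata.var above
print(var_index[:2])  # ['ENSG00000000000', 'ENSG00000000001']
print(invalid_gene_ids(var_index))  # []
print(invalid_gene_ids(["ENSG123", "ensg00000000001", "ENSG00000000001"]))  # ['ENSG123', 'ensg00000000001']
```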

To validate a SpatialData object or any other array-like dataset, you need to construct a Schema. You can do this by composing simple pandera-style schemas: docs.lamin.ai/curate.
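The idea of composing simple schemas can be illustrated with a toy: one small column schema per table-like component, combined into a composite keyed by component name, so that one call validates a multi-part dataset. `ColumnSchema`, `CompositeSchema`, and the slot names `sample` and `cells` are hypothetical stand-ins, not LaminDB's `Schema` API; see docs.lamin.ai/curate for the real interface.

```python
from dataclasses import dataclass, field


@dataclass
class ColumnSchema:
    """A pandera-style schema for one table: required columns and their Python types."""
    columns: dict

    def validate(self, table: dict) -> list:
        errors = []
        for name, typ in self.columns.items():
            if name not in table:
                errors.append(f"missing column {name!r}")
            elif not all(isinstance(v, typ) for v in table[name]):
                errors.append(f"column {name!r} is not all {typ.__name__}")
        return errors


@dataclass
class CompositeSchema:
    """Combines component schemas, one per named slot of a multi-part dataset."""
    slots: dict = field(default_factory=dict)

    def validate(self, dataset: dict) -> dict:
        report = {}
        for slot, schema in self.slots.items():
            # a missing slot is an error; otherwise delegate to the component schema
            report[slot] = ["missing slot"] if slot not in dataset else schema.validate(dataset[slot])
        return report


# hypothetical slots for a spatial dataset with a sample-level and a cell-level table
schema = CompositeSchema({
    "sample": ColumnSchema({"assay": str}),
    "cells": ColumnSchema({"cell_type": str, "x": float, "y": float}),
})
dataset = {
    "sample": {"assay": ["Visium"]},
    "cells": {"cell_type": ["T cell", "B cell"], "x": [0.1, 2.5], "y": [1.0, "n/a"]},
}
print(schema.validate(dataset))
# {'sample': [], 'cells': ["column 'y' is not all float"]}
```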

Ontologies

The bionty plugin gives you more than 20 public ontologies as SQLRecord registries. It is what validated the Ensembl gene IDs in the AnnData example above.

import bionty as bt

bt.CellType.import_source()  # import the default ontology
bt.CellType.to_dataframe()  # your extendable cell type ontology in a simple registry

✓ import is completed!

| id | uid | name | ontology_id | abbr | synonyms | description | is_locked | created_at | branch_id | space_id | created_by_id | run_id | source_id |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3453 | 1ChUsEzDZXWW4B | beam B cell, human | CL:7770006 | None | None | A Trabecular Meshwork Cell Within The Eye'S Tr... | False | 2026-02-05 16:19:44.983000+00:00 | 1 | 1 | 3 | None | 50 |
| 3452 | 5xoxfxIf7WrLdU | beam cell | CL:7770005 | None | None | A Trabecular Meshwork Cell That Is Part Of The... | False | 2026-02-05 16:19:44.983000+00:00 | 1 | 1 | 3 | None | 50 |
| 3451 | 2j5mhhFoV2vBDV | suprabasal cell | CL:7770004 | None | None | An Epithelial Cell That Resides In The Layer(S... | False | 2026-02-05 16:19:44.983000+00:00 | 1 | 1 | 3 | None | 50 |
| 3450 | RBCFqAmkM1oaaZ | beam A cell | CL:7770003 | None | None | A Beam Cell Within The Eye'S Trabecular Meshwo... | False | 2026-02-05 16:19:44.983000+00:00 | 1 | 1 | 3 | None | 50 |
| 3449 | 79Ow7BGPRP018I | juxtacanalicular tissue cell | CL:7770002 | None | None | A Trabecular Meshwork Cell Of The Juxtacanalic... | False | 2026-02-05 16:19:44.983000+00:00 | 1 | 1 | 3 | None | 50 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 3358 | gDJgUmTBv5AHYt | Astro-OLF NN_2 Alk astrocyte (Mmus) | CL:4307054 | None | 5234 Astro-OLF NN_2 | A Astrocyte Of The Mus Musculus Brain. It Is D... | False | 2026-02-05 16:19:44.968000+00:00 | 1 | 1 | 3 | None | 50 |
| 3357 | 51U0BVtjFHQGxE | Astro-OLF NN_2 Slc25a34 astrocyte (Mmus) | CL:4307053 | None | 5233 Astro-OLF NN_2 | A Astrocyte Of The Mus Musculus Brain. It Is D... | False | 2026-02-05 16:19:44.968000+00:00 | 1 | 1 | 3 | None | 50 |
| 3356 | FjYN3z6zMFQ3JV | Astro-OLF NN_1 Stk32a astrocyte (Mmus) | CL:4307052 | None | 5232 Astro-OLF NN_1 | A Astrocyte Of The Mus Musculus Brain. It Is D... | False | 2026-02-05 16:19:44.968000+00:00 | 1 | 1 | 3 | None | 50 |
| 3355 | 8tHAMMeaiLxdaP | Astro-OLF NN_1 Greb1 astrocyte (Mmus) | CL:4307051 | None | 5231 Astro-OLF NN_1 | A Astrocyte Of The Mus Musculus Brain. It Is D... | False | 2026-02-05 16:19:44.968000+00:00 | 1 | 1 | 3 | None | 50 |
| 3354 | 5SkKyhULGbfXWC | Astro-TE NN_5 Adamts18 astrocyte (Mmus) | CL:4307050 | None | 5230 Astro-TE NN_5 | A Astrocyte Of The Mus Musculus Brain. It Is D... | False | 2026-02-05 16:19:44.968000+00:00 | 1 | 1 | 3 | None | 50 |

100 rows × 13 columns

Read more: docs.lamin.ai/manage-ontologies.
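A registry like `bt.CellType` is what lets free-text labels, such as the `cell_type_by_model` values in the AnnData example, be mapped to ontology terms. The sketch below shows the idea with a tiny hand-written excerpt: look a label up by name, then by synonym, and return the canonical name with its ontology ID. The three Cell Ontology IDs are real CL terms, but `CELL_TYPES` and `standardize` are hypothetical stand-ins for what the registry provides, and matching here is exact and case-sensitive.

```python
from typing import Optional, Tuple

# hypothetical excerpt of a cell type registry: name -> (ontology_id, synonyms)
CELL_TYPES = {
    "T cell": ("CL:0000084", {"T lymphocyte"}),
    "B cell": ("CL:0000236", {"B lymphocyte"}),
    "natural killer cell": ("CL:0000623", {"NK cell"}),
}


def standardize(label: str) -> Optional[Tuple[str, str]]:
    """Map a label to (canonical name, ontology ID), matching names first, then synonyms."""
    if label in CELL_TYPES:
        return label, CELL_TYPES[label][0]
    for name, (ontology_id, synonyms) in CELL_TYPES.items():
        if label in synonyms:
            return name, ontology_id
    return None  # unknown label: would fail validation


labels = ["T cell", "B cell", "NK cell"]  # the cell_type_by_model values from the AnnData example
print([standardize(x) for x in labels])
# [('T cell', 'CL:0000084'), ('B cell', 'CL:0000236'), ('natural killer cell', 'CL:0000623')]
print(standardize("beam cell"))  # None (not in this tiny excerpt)
```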